https://github.com/KupynOrest/DeblurGAN

GitHub - KupynOrest/DeblurGAN: Image Deblurring using Generative Adversarial Networks

Image Deblurring using Generative Adversarial Networks - GitHub - KupynOrest/DeblurGAN: Image Deblurring using Generative Adversarial Networks

github.com

https://arxiv.org/abs/1711.07064

DeblurGAN: Blind Motion Deblurring Using Conditional Adversarial Networks

We present DeblurGAN, an end-to-end learned method for motion deblurring. The learning is based on a conditional GAN and the content loss . DeblurGAN achieves state-of-the art performance both in the structural similarity measure and visual appearance. The

arxiv.org

토대로 공부하고 작성했습니다.

저번에는 논문 내용을 중심으로 봤습니다. 이번에는 Github에 올라온 코드를 살펴보겠습니다.

먼저 파일들이 여러 개 있습니다. 그중 options을 보면

base_options, test_options, train_options이 존재하는데 각 상황에 맞게 매개변수들을 설정해주는 코드입니다. base_options에 대해서 살펴보면

import argparse

import os

from util import util

import torch

class BaseOptions():

def __init__(self):

self.parser = argparse.ArgumentParser()

self.initialized = False

def initialize(self):

#TEST

#self.parser.add_argument('--dataroot', type=str, default="D:\Photos\TrainingData\BlurredSharp\combined", help='path to images (should have subfolders trainA, trainB, valA, valB, etc)')

self.parser.add_argument('--dataroot', type=str, default='datasets\AB', help='path to images (should have subfolders trainA, trainB, valA, valB, etc)')

self.parser.add_argument('--batchSize', type=int, default=1, help='input batch size')

self.parser.add_argument('--loadSizeX', type=int, default=640, help='scale images to this size')

self.parser.add_argument('--loadSizeY', type=int, default=360, help='scale images to this size')

self.parser.add_argument('--fineSize', type=int, default=256, help='then crop to this size')

self.parser.add_argument('--input_nc', type=int, default=3, help='# of input image channels')

self.parser.add_argument('--output_nc', type=int, default=3, help='# of output image channels')

self.parser.add_argument('--ngf', type=int, default=64, help='# of gen filters in first conv layer')

self.parser.add_argument('--ndf', type=int, default=64, help='# of discrim filters in first conv layer')

self.parser.add_argument('--which_model_netD', type=str, default='basic', help='selects model to use for netD')

self.parser.add_argument('--which_model_netG', type=str, default='resnet_9blocks', help='selects model to use for netG')

self.parser.add_argument('--learn_residual', action='store_true', help='if specified, model would learn only the residual to the input')

self.parser.add_argument('--gan_type', type=str, default='wgan-gp', help='wgan-gp : Wasserstein GAN with Gradient Penalty, lsgan : Least Sqaures GAN, gan : Vanilla GAN')

self.parser.add_argument('--n_layers_D', type=int, default=3, help='only used if which_model_netD==n_layers')

self.parser.add_argument('--gpu_ids', type=str, default='0', help='gpu ids: e.g. 0 0,1,2, 0,2. use -1 for CPU')

self.parser.add_argument('--name', type=str, default='experiment_name', help='name of the experiment. It decides where to store samples and models')

self.parser.add_argument('--dataset_mode', type=str, default='aligned', help='chooses how datasets are loaded. [unaligned | aligned | single]')

self.parser.add_argument('--model', type=str, default='content_gan', help='chooses which model to use. pix2pix, test, content_gan')

self.parser.add_argument('--which_direction', type=str, default='AtoB', help='AtoB or BtoA')

self.parser.add_argument('--nThreads', default=2, type=int, help='# threads for loading data')

self.parser.add_argument('--checkpoints_dir', type=str, default='./checkpoints', help='models are saved here')

self.parser.add_argument('--norm', type=str, default='instance', help='instance normalization or batch normalization')

self.parser.add_argument('--serial_batches', action='store_true', help='if true, takes images in order to make batches, otherwise takes them randomly')

self.parser.add_argument('--display_winsize', type=int, default=256, help='display window size')

self.parser.add_argument('--display_id', type=int, default=1, help='window id of the web display')

self.parser.add_argument('--display_id', type=int, default=0, help='window id of the web display')

self.parser.add_argument('--display_port', type=int, default=8097, help='visdom port of the web display')

self.parser.add_argument('--display_single_pane_ncols', type=int, default=0, help='if positive, display all images in a single visdom web panel with certain number of images per row.')

self.parser.add_argument('--no_dropout', action='store_true', help='no dropout for the generator')

self.parser.add_argument('--max_dataset_size', type=int, default=float("inf"), help='Maximum number of samples allowed per dataset. If the dataset directory contains more than max_dataset_size, only a subset is loaded.')

self.parser.add_argument('--resize_or_crop', type=str, default='resize_and_crop', help='scaling and cropping of images at load time [resize_and_crop|crop|scale_width|scale_width_and_crop]')

self.parser.add_argument('--no_flip', action='store_true', help='if specified, do not flip the images for data augmentation')

self.initialized = True

def parse(self):

if not self.initialized:

self.initialize()

self.opt = self.parser.parse_args()

self.opt.isTrain = self.isTrain # train or test, test인 경우 false 저장

str_ids = self.opt.gpu_ids.split(',')

self.opt.gpu_ids = []

for str_id in str_ids:

id = int(str_id)

if id >= 0:

self.opt.gpu_ids.append(id)

# set gpu ids

if len(self.opt.gpu_ids) > 0:

torch.cuda.set_device(self.opt.gpu_ids[0])

args = vars(self.opt)

print('------------ Options -------------')

for k, v in sorted(args.items()):

print('%s: %s' % (str(k), str(v)))

print('-------------- End ----------------')

# save to the disk

expr_dir = os.path.join(self.opt.checkpoints_dir, self.opt.name)

util.mkdirs(expr_dir)

file_name = os.path.join(expr_dir, 'opt.txt')

with open(file_name, 'wt') as opt_file:

opt_file.write('------------ Options -------------\n')

for k, v in sorted(args.items()):

opt_file.write('%s: %s\n' % (str(k), str(v)))

opt_file.write('-------------- End ----------------\n')

return self.opt

이와 같이 코드가 작성되어 있습니다.

그 다음 datasets 폴더를 보면

combine_A_and_B.py 코드가 있는데

from pdb import set_trace as st

import os

import numpy as np

import cv2

import argparse

parser = argparse.ArgumentParser('create image pairs')

parser.add_argument('--fold_A', dest='fold_A', help='input directory for image A', type=str, default='../dataset/50kshoes_edges')

parser.add_argument('--fold_B', dest='fold_B', help='input directory for image B', type=str, default='../dataset/50kshoes_jpg')

parser.add_argument('--fold_AB', dest='fold_AB', help='output directory', type=str, default='../dataset/test_AB')

parser.add_argument('--num_imgs', dest='num_imgs', help='number of images',type=int, default=1000000)

parser.add_argument('--use_AB', dest='use_AB', help='if true: (0001_A, 0001_B) to (0001_AB)',action='store_true')

args = parser.parse_args()

# 매개변수 선언, 이미지 저장할 폴더 위치

for arg in vars(args):

print('[%s] = ' % arg, getattr(args, arg))

splits = os.listdir(args.fold_A)

for sp in splits:

img_fold_A = os.path.join(args.fold_A, sp)

img_fold_B = os.path.join(args.fold_B, sp)

# input directory 두 폴더에 존재하는 파일들 불러옴

img_list = os.listdir(img_fold_A)

if args.use_AB:

img_list = [img_path for img_path in img_list if '_A.' in img_path]

num_imgs = min(args.num_imgs, len(img_list))

print('split = %s, use %d/%d images' % (sp, num_imgs, len(img_list)))

img_fold_AB = os.path.join(args.fold_AB, sp)

if not os.path.isdir(img_fold_AB):

os.makedirs(img_fold_AB)

# img_fold_AB 폴더가 없으면 생성

print('split = %s, number of images = %d' % (sp, num_imgs))

for n in range(num_imgs):

name_A = img_list[n]

path_A = os.path.join(img_fold_A, name_A)

if args.use_AB:

name_B = name_A.replace('_A.', '_B.')

else:

name_B = name_A

path_B = os.path.join(img_fold_B, name_B)

if os.path.isfile(path_A) and os.path.isfile(path_B):

name_AB = name_A

if args.use_AB:

name_AB = name_AB.replace('_A.', '.') # remove _A

path_AB = os.path.join(img_fold_AB, name_AB)

im_A = cv2.imread(path_A, cv2.IMREAD_COLOR)

im_B = cv2.imread(path_B, cv2.IMREAD_COLOR)

im_AB = np.concatenate([im_A, im_B], 1)

cv2.imwrite(path_AB, im_AB)

# path_A에 저장된 이미지 파일과 path_B에 저장된 이미지 파일을 컬러로 읽어와 서로 붙여주고 path_AB에 저장한다.

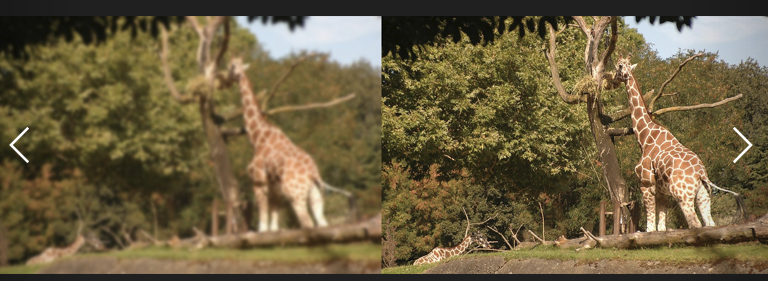

이와 같이 작성되어 있습니다. 이 코드는 이미지 파일이 존재하는 두 폴더에서 이미지를 서로 붙여서 새로운 폴더에 저장하는 코드입니다. 학습을

이와 같이 이미지 2장을 붙인 상태로 진행하기 위해 존재하는 코드입니다. Deblur를 위해 한쪽 이미지는 blur 처리한 이미지를 넣고 다른 한쪽에는 원본 이미지(sharp image)를 넣어줍니다.

'연구실 공부' 카테고리의 다른 글

| Image deblurring using DeblurGAN(base_model.py, models.py, test_models.py) (0) | 2022.03.09 |

|---|---|

| Image deblurring using DeblurGAN(data) (0) | 2022.03.09 |

| Image deblurring using DeblurGAN (0) | 2022.03.07 |

| Resnet, Unet, GAN 구조 (0) | 2022.02.25 |

| image-deblurring-using-deep-learning (0) | 2022.02.21 |